Argo workflow demo at the Kubernetes Community Meeting

Note: This is for an older version of Argo. For the latest information please check out the argoproj GitHub repo: https://github.com/argoproj/argo

and the recent TGIK demo of Argo by Joe Beda:

https://www.youtube.com/watch?v=M_rxPPLG8pU&start=859

This post walks through the Argo demo we showed at the Kubernetes Community Meeting on September 14th.

The demo showed how to quickly create and run Argo workflows from the command line. We also showed how Argo artifacts and fixtures increase the power and usefulness of container-native workflows on Kubernetes.

We’d like to thank everyone for their feedback and encouragement! If you missed the meeting, here are the relevant links.

- Kubernetes Community Meeting Working Doc

- Meeting recording (the demo starts at 1 min 30 seconds)

- https://github.com/argoproj/argo

Demo walkthrough

We forked the influxdb GitHub project and added a .argo directory with a few Argo templates.

An Argo workflow template has three main sections:

- Header

- Inputs and outputs

- Steps

In our demo, we run a workflow called InfluxDB CI that does continuous integration for influxdb. It has two input parameters: COMMIT, which is the commit ID to build, and REPO, which is a URL to our forked GitHub repo. Note that the default values of these parameters are set to %%session.xxx%%. This means that the values will be filled in based on the session that initiates the workflow. Usually, sessions are initiated from the CLI, GUI, or an event trigger.
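To make the idea of session-filled defaults concrete, here is a minimal Python sketch of the substitution. It is not Argo's implementation, just an illustration of the concept. The session keys `commit` and `repo`, the example URL, and the function name `resolve_session_params` are all hypothetical.

```python
import re

# Matches %%session.<name>%% placeholders. The pattern is an assumption
# based on the %%session.xxx%% form described in the text.
PLACEHOLDER = re.compile(r"%%session\.(\w+)%%")


def resolve_session_params(defaults, session):
    """Return a copy of `defaults` with session placeholders filled in."""
    def fill(match):
        key = match.group(1)
        if key not in session:
            raise KeyError(f"session has no value for {key!r}")
        return session[key]

    return {name: PLACEHOLDER.sub(fill, value) for name, value in defaults.items()}


# Hypothetical session values, as a CLI, GUI, or event trigger might supply them.
session = {"commit": "abc123", "repo": "https://example.com/fork/influxdb.git"}

# Parameter defaults that defer to the session (key names are hypothetical).
defaults = {"COMMIT": "%%session.commit%%", "REPO": "%%session.repo%%"}

params = resolve_session_params(defaults, session)
print(params)
```

A missing session value raises an error here rather than leaving the placeholder in place. That is a design choice for the sketch, not a statement about how Argo behaves.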

The InfluxDB CI workflow consists of four steps: CHECKOUT, INFLUXDB-BUILD, INFLUXDB-TEST-UNIT, and INFLUXDB-TEST-E2E. Steps that are listed as part of the same YAML array, denoted with the - symbol, are run in parallel, e.g. the INFLUXDB-BUILD and INFLUXDB-TEST-UNIT steps.
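The execution order can be pictured as a list of groups: groups run one after another, and steps within a group run in parallel. The Python sketch below only illustrates that scheduling idea. The exact grouping of the four steps is my assumption from the description, and `run_step` is a hypothetical stand-in for launching a container.

```python
from concurrent.futures import ThreadPoolExecutor

# Outer list: groups run sequentially.
# Inner list: steps in the same group run in parallel.
# This grouping is an assumption based on the description of the workflow.
STEP_GROUPS = [
    ["CHECKOUT"],
    ["INFLUXDB-BUILD", "INFLUXDB-TEST-UNIT"],
    ["INFLUXDB-TEST-E2E"],
]


def run_step(name):
    """Hypothetical stand-in for running a step; just returns its name."""
    return name


def run_workflow(groups):
    """Run each group in order, with the steps of a group in parallel threads."""
    completed = []
    for group in groups:
        with ThreadPoolExecutor(max_workers=len(group)) as pool:
            # list(pool.map(...)) blocks until every step in the group is done
            completed.append(list(pool.map(run_step, group)))
    return completed


result = run_workflow(STEP_GROUPS)
print(result)
```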

Note the use of artifacts to pass the output of one step as input arguments to a subsequent step. I find it useful to read the artifact references from right to left, so %%steps.CHECKOUT.outputs.artifacts.CODE%% can be read as the CODE artifact that is generated as an output of the CHECKOUT step.
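The right-to-left reading can be spelled out in a few lines of Python. This is only a sketch of how such a reference decomposes. The helper names `parse_artifact_ref` and `describe` are hypothetical, and the parser assumes references always have the five-part shape shown above.

```python
def parse_artifact_ref(ref):
    """Split a %%steps.<STEP>.outputs.artifacts.<NAME>%% reference into its parts."""
    if not (ref.startswith("%%") and ref.endswith("%%")):
        raise ValueError(f"not a delimited reference: {ref}")
    parts = ref[2:-2].split(".")
    # Assumed shape: steps.<STEP>.outputs.artifacts.<NAME>
    if len(parts) != 5 or parts[0] != "steps" or parts[2:4] != ["outputs", "artifacts"]:
        raise ValueError(f"unexpected reference shape: {ref}")
    return {"step": parts[1], "artifact": parts[4]}


def describe(ref):
    """Read the reference right to left: artifact first, then the producing step."""
    p = parse_artifact_ref(ref)
    return f"the {p['artifact']} artifact that is an output of the {p['step']} step"


print(describe("%%steps.CHECKOUT.outputs.artifacts.CODE%%"))
```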

Let’s take a closer look at the INFLUXDB-BUILD step, which invokes the influxdb-build template.

The influxdb-build template has one input, the CODE artifact, and generates one output, the INFLUXD artifact. The input artifact will be placed at the path /go/src/github.com/influxdata/influxdb and the output artifact, which is simply the InfluxDB binary built by the template, will be found at /go/bin/influxd. The template uses the golang:1.8.3 image from DockerHub to perform the build.

Now let’s see how fixtures work by looking at the INFLUXDB-TEST-E2E step, which uses the influxdb-test-e2e template.

The influxdb-test-e2e template has one input artifact called INFLUXD. Note that this artifact is not mapped to any directory path in this template because the artifact is simply passed to other steps in the workflow and is not actually consumed by the workflow itself.

The workflow declares a fixture called INFLUXDB by running the influxdb-server template. By declaring the influxdb-server template as a fixture, we ensure that the InfluxDB server will be started before running the workflow steps and will be destroyed once the workflow is finished. Think of it as a convenient way to manage the lifecycle of services needed during the running of a workflow. Note that we can refer to attributes of the fixture such as its IP address (e.g. %%fixtures.INFLUXDB.ip%%) from steps in the workflow.
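If you think in code, a fixture behaves a lot like a context manager: set up before the steps, tear down afterwards, even when a step fails. The Python sketch below illustrates that lifecycle only. It is not how Argo implements fixtures, and the `fixture` helper, the `events` list, and the 10.0.0.5 address are all hypothetical.

```python
from contextlib import contextmanager

events = []  # records the order of lifecycle events, for illustration only


@contextmanager
def fixture(name, ip):
    """Start a service before the steps run and destroy it afterwards."""
    events.append(f"start {name}")
    try:
        # Expose attributes that steps may reference, such as the IP address.
        yield {"ip": ip}
    finally:
        # Runs even if a step raises, so the service is always cleaned up.
        events.append(f"destroy {name}")


def run_e2e_steps():
    # 10.0.0.5 is a placeholder address, not a real fixture IP.
    with fixture("INFLUXDB", ip="10.0.0.5") as influxdb:
        events.append(f"test against {influxdb['ip']}")


run_e2e_steps()
print(events)
```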

Let’s take a look at the influxdb-server template to see how it is implemented.

Well, that was anti-climactic! All it does is run the INFLUXD artifact that we already built using a debian DockerHub image :-)

Ok, now that we’ve seen what an Argo workflow looks like, let’s run it using the following Argo CLI commands.

- argo job submit

- argo job list

- argo job show

- argo job logs

Here we go!

Something I really like about Argo is that if your project repo is public, you don’t even need to “add” the repo to Argo. You can just fork the public repo, add a .argo directory and then start running workflows without doing any additional configuration. By using the --local flag with the argo job submit command, you don’t even have to commit your changes. The local copy of templates from the .argo directory will be used instead of the committed copy. This avoids cluttering your repo with useless intermediate commits while you are debugging your workflow.

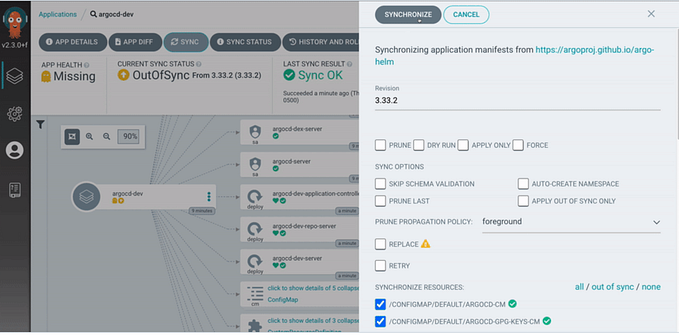

Finally, note the HTTP link highlighted in the above example. If you click on the link, it will open a job status page in your web browser.

From the job status page, you can see a real-time graphical display of your running workflow, browse the YAML for each step, download logs and artifacts, and even shell (e.g. sh or bash) into running steps.

One last thing: if you click on the Job Summary, you will see some stats for the job, including how much it cost to run the job! I’ve heard people say that developers don’t care how much things cost, but I find it very useful for optimizing workflows and getting code to run efficiently.

If you’ve read all the way to the end, thank you! Please give Argo a try. Here is a video of the demo (it starts at 1 min 30 seconds).

Ed Lee is a cofounder of Applatix, a startup committed to helping users realize the value of containers and Kubernetes in their day-to-day work.